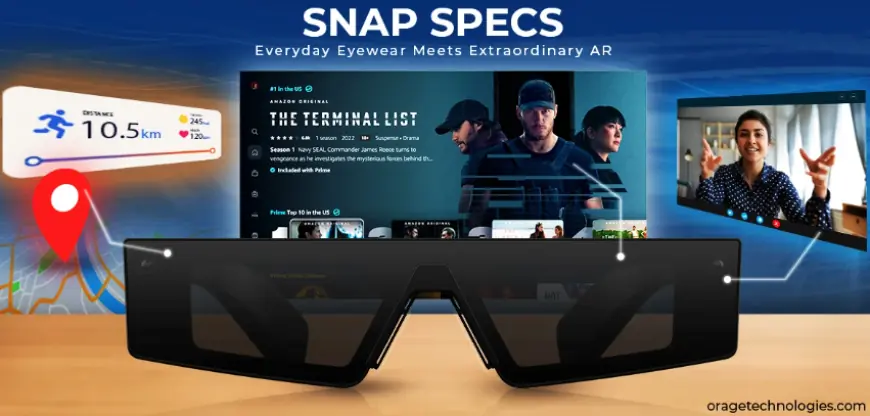

Snap Specs: Redefining Reality with AR Eyewear

Discover how Snap Specs, launching in 2026, transform daily life with AR eyewear. This guide explores their features, AI capabilities, and limitations, with FAQs to highlight their role in redefining reality through augmented reality.

Snap Inc. plans to launch Snap Specs, its lightweight, stand-alone AR (augmented reality) glasses, in 2026. The glasses combine a stylish frame with current display technology to layer digital content on top of the real world and real-world social experiences. Snap Specs aim to make AR engaging and easy to access for everyday users, whether to improve social interactions or to help with daily tasks. This blog post examines Snap Specs through key questions about their features and future potential, followed by an FAQ section that answers common questions.

What Are Snap Specs, and What Sets Them Apart?

Snap Specs are Snap Inc.'s sixth generation of AR glasses, designed for consumers with a focus on wearability and immersive experiences. Unlike their developer-only predecessors, Snap Specs are aimed at a general audience. Their see-through lenses and integrated waveguide optics allow vibrant digital overlays without blocking the user's view. Running on Snap OS, the Specs combine AI and gesture controls with a 46-degree field of view, so the real world and digital content are visible at the same time.

For example, users could follow real-time navigation cues or play interactive AR games while still maintaining eye contact with other people. With their wearable design and privacy-centered on-device processing, Snap Specs are intended to make AR more accessible.

In What Ways Do Snap Specs Fuse AR into Everyday Activity?

Snap Specs enhance everyday activities by layering relevant digital information onto the physical world. Users view AR content through the transparent lenses while staying aware of the world around them, which makes interactions with AR feel more instinctive. For example, travelers can use the "Super Travel" Lens to translate foreign signs, while home cooks can follow along as the "Cookmate" Lens walks through each step of a recipe.

Furthermore, Snap Specs enable web browsing on a virtual screen and provide AI-enabled suggestions for activities, such as how to line up a pool shot. By blending AR into everyday activities, Snap Specs make the technology feel intuitive and natural rather than like a separate overlay.

What Technology Is Involved in Snap Specs?

Snap Specs pack an impressive array of technology into a streamlined 226-gram package. The Specs contain two full-color cameras, two infrared cameras, and a six-axis IMU to handle spatial understanding. With a 46-degree field of view (FOV) and a resolution of 37 pixels per degree, Snap Specs provide a display comparable to a 100-inch screen viewed from 10 feet. Dual Snapdragon processors with vapor chambers, like those mentioned in our Armature tutorial, deliver the visual experience without excessive overheating.

The Specs also have stereo speakers, a six-microphone array for voice commands, and liquid crystal on silicon (LCoS) micro-projectors for bright, full-color imaging. Together, this hardware supports gesture and voice interaction while keeping the Specs simple to use. Snap Specs are a considerable engineering feat wrapped in an uncomplicated user experience.
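The display figures quoted in this section can be sanity-checked with a little trigonometry. The sketch below is only a rough check, not Snap's own calculation. It assumes the 46-degree field of view is measured along the same axis as the 100-inch screen size (the diagonal), and that the 37 pixels per degree is uniform across the view. The function names are made up for illustration.

```python
import math

def angular_size_deg(size: float, distance: float) -> float:
    """Angle (in degrees) subtended by an object of a given size
    seen head-on from a given distance (same units for both)."""
    return math.degrees(2 * math.atan((size / 2) / distance))

def pixels_across(fov_deg: float, pixels_per_degree: float) -> float:
    """Approximate pixel count spanning the field of view,
    assuming a uniform pixels-per-degree density (an assumption)."""
    return fov_deg * pixels_per_degree

# Figures taken from the article
FOV_DEG = 46
PIXELS_PER_DEGREE = 37

# A 100-inch screen viewed from 10 feet (120 inches)
screen_angle = angular_size_deg(size=100, distance=10 * 12)
approx_pixels = pixels_across(FOV_DEG, PIXELS_PER_DEGREE)

print(f"100-inch screen at 10 ft spans about {screen_angle:.1f} degrees")
print(f"46 degrees at 37 px/degree is about {approx_pixels:.0f} pixels across")
```

Under these assumptions the screen spans roughly 45 degrees, which is close to the stated 46-degree field of view, so the "100-inch screen at 10 feet" comparison is plausible.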

How Do Snap Specs Facilitate Social and Creative Experiences?

Snap Specs serve as a strong social and creative platform that builds on Snap's AR work in Snapchat. They can tap into a large AR content library: more than 400,000 Lens creators have built over 4 million Lenses on Snapchat. For example, users can create shared Lens avatars with their friends and work together on collaborative digital murals. Users can also play multiplayer games that use the AR capabilities of Snap Specs, such as golf.

Lastly, Snap Specs let creators generate 3D assets on the fly using the Snap3D API, which encourages content creation. Together, these elements make Snap Specs a fun avenue for social connection and creative expression.

What Role Does AI Play in Snap Specs?

AI drives Snap Specs' ability to understand and act on the world around the user. With capabilities powered by OpenAI and Google's Gemini, along with on-device audio and visual processing, Snap Specs aim to balance accuracy, privacy, and performance. My AI, the AI assistant, can also answer requests for information, provide multilingual translations, or give real-time guidance, for example helping users learn musical instruments in "coaching" mode.

In addition, the Depth Module API anchors digital objects to 3D anchor points, which lets the "Drum Kit" app overlay cues on a user's physical drum set. On-device AI could provide this kind of spatial awareness in potentially any application, which makes Snap Specs flexible and able to interpret physical and digital spaces at the same time.

How Do Snap Specs Tackle Privacy Issues?

Snap Specs build privacy into their design, addressing an issue that is pervasive across wearable technology. The dual Snapdragon processors keep data processing on-device, which reduces dependence on external servers and is a step toward minimizing data breaches. The Snap Remote Service Gateway adds a further safeguard by limiting data transfer.

For example, some features require camera access but not personal information or stored video. For features such as menu translation or object recognition, Snap Specs run the computation on-device and do not store any of the footage being processed. The goal is for users to enjoy augmented reality experiences with confidence. These features make Snap Specs an appealing product for privacy-conscious users.

How Do Snap Specs Solve Privacy Concerns?

For Snap Specs, privacy is a central design concern, as it is widely seen as a problem in wearable technology. By processing information locally on dual Snapdragon processors, the glasses do not have to offload everything to a server, which limits the data leaks that can occur when data is sent to a company's servers. Snap's Remote Service Gateway further limits the data captured by sending only the metadata that a given function requires.

For example, during menu translation or object recognition, the information is processed in real time without raw footage ever being stored. Users can therefore feel less anxious and enjoy AR experiences without compromising their privacy by exposing raw footage. As a result, Snap Specs position themselves as a privacy-respecting option within the AR ecosystem.
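The idea of processing footage locally and sending out only the metadata a function needs can be illustrated with a small sketch. This is a generic data-minimization pattern, not Snap's implementation or the Remote Service Gateway API. Every name here (`OutboundMetadata`, `translate_menu_on_device`, `handle_frame`) is hypothetical, and the "translation" is a placeholder.

```python
from dataclasses import dataclass, asdict
from typing import Dict, Tuple

@dataclass(frozen=True)
class OutboundMetadata:
    """Hypothetical payload: describes the request, never contains pixels."""
    feature: str
    result_length: int

def translate_menu_on_device(frame: bytes) -> str:
    """Stand-in for a local model; a real device would run inference here."""
    return "placeholder translation"

def handle_frame(frame: bytes, feature: str) -> Tuple[str, Dict[str, object]]:
    """Process a camera frame locally and build a metadata-only payload.

    The raw frame is used only inside this function and is not copied
    into the payload, so nothing leaving the device carries footage.
    """
    result = translate_menu_on_device(frame)
    payload = asdict(OutboundMetadata(feature=feature, result_length=len(result)))
    return result, payload

# Usage: the user sees the result; only the small payload would be sent out.
result, payload = handle_frame(b"\x00" * 16, "menu_translation")
print(result)
print(payload)
```

The design choice is that the outbound type has no field that could hold image data, so the limit on what leaves the device is enforced by the payload's structure rather than by convention.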

What Are the Limitations of Snap Specs?

Nevertheless, Snap Specs are not without their limits. The 45-minute battery life suits short sessions but not prolonged use. The 46-degree field of view is immersive, but it is narrower than that of some competitors in the space, such as Meta's Orion. Also, while the gesture controls work well, their response can lag, so a gesture may take several tries to register accurately.

Additionally, Snap Specs are lighter than previous versions, but they may still feel bulky to some users. Snap has acknowledged these limitations, marketing the Specs for playful, short sessions rather than all-day use, and is looking to improve performance before the official launch in 2026.

How Do Developers Drive the Ecosystem of Snap Specs?

Snap Specs benefit from Snap's established developer ecosystem, which features over 250,000 Lens creators who have made 2.5 million Lenses that have been viewed 3.5 trillion times. New tools such as the Depth Module API, Automated Speech Recognition, and the Snap3D API allow developers to create sophisticated AR experiences, such as real-time translation or interactive step-by-step tutorials.

Snap has also partnered with Niantic Spatial to expand location-based AR and bring guided tours and museum experiences to life. In addition, with the integration of OpenAI and Google's Gemini, developers can create multimodal Lenses, which should give Snap Specs a well-stocked Lens catalog at launch.

Frequently Asked Questions About Snap Specs

What Are Snap Specs, and Who Can Use Them?

Snap Specs are consumer AR glasses launching in 2026, designed for everyday users. They overlay digital content onto the real world, supporting tasks like navigation, gaming, and content creation, making them ideal for social and practical use.

How Do Snap Specs Differ from Other AR Glasses?

Snap Specs are lighter and more stylish than competitors like Meta’s Orion or Apple’s Vision Pro, focusing on short, playful AR sessions. Their Snap OS and developer ecosystem provide a unique range of social and creative Lenses.

What Is the Battery Life of Snap Specs?

Snap Specs offer a 45-minute battery life, optimized for brief, engaging sessions like gaming or translation. They’re designed for burst usage rather than continuous wear.

Are Snap Specs Comfortable for Daily Use?

At 226 grams with a slim design, Snap Specs mimic regular eyewear, ensuring comfort for short-term use. However, some users may find them slightly bulky for extended wear.

Can Developers Build Apps for Snap Specs?

Yes, Snap’s Lens Studio supports developers in creating AR Lenses, with tools like the Snap3D API and integrations with OpenAI and Gemini. Over 400,000 creators are already building for Snap Specs.
