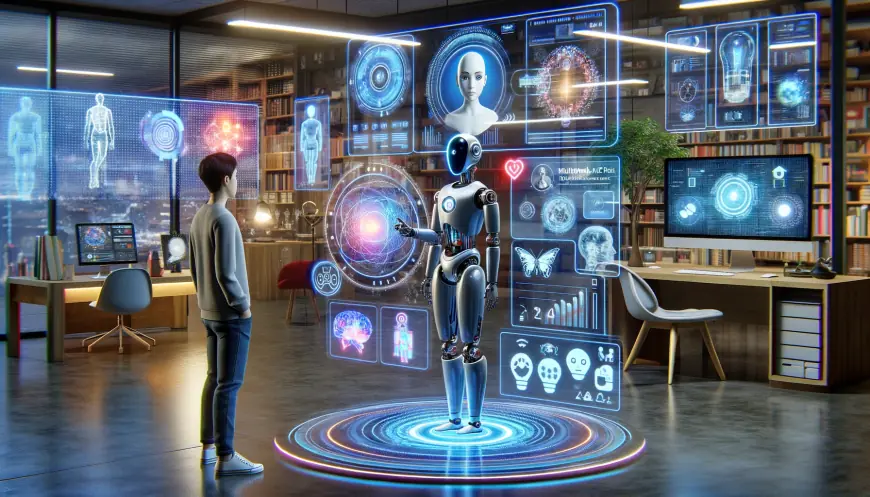

What Exactly Are Multi-Modal AI Agents?

Artificial intelligence is undergoing a significant transformation, moving beyond the confines of single-sense data. We're all familiar with chatbots that process text or image recognition software that analyzes pictures. But what if an AI could do both simultaneously and then some? This is the fascinating world of multi-modal AI agents.

Think of it this way: humans are inherently multi-modal. When we look at a picture of a dog, we don't just see the image; we might also hear a bark in our minds, feel the texture of its fur, or remember the scent of a wet dog after a rainstorm. Our brains seamlessly integrate information from different senses to create a holistic understanding of the world. Multi-modal AI agents are designed to mimic this ability, processing and understanding information from various sources like text, images, audio, video, and even haptic feedback.

So, what does this look like in practice? Imagine a user uploading a photo of a broken appliance and simultaneously providing a voice note describing the problem. A multi-modal AI agent could analyze the visual evidence from the photo (the specific part that's broken) and combine it with the user's verbal description to diagnose the issue more accurately than either input would allow on its own. It could then generate a text response with a step-by-step repair guide, or even a video tutorial, all within a single interaction.

This is a powerful leap forward from traditional AI, which typically operates in silos. A simple text-based chatbot would be unable to process the image, and a simple image recognition system would be unable to understand the nuance of the user's voice note. The multi-modal agent bridges this gap, creating a more comprehensive and natural user experience.

The Core Components of a Multi-Modal Agent

Creating these sophisticated agents requires a combination of several key technologies. At its heart, a multi-modal agent relies on a fusion of different AI models.

1. Data Fusion: The first and most critical step is the ability to fuse data from different modalities. This isn't as simple as just stacking the data on top of each other. The AI needs to learn how to relate the information from an image to the information in a piece of text. For instance, it needs to understand that the word "cat" in a sentence corresponds to the image of a cat in a photo. This is achieved through complex neural networks that are trained on massive datasets of paired information (e.g., millions of images with corresponding text descriptions).

2. Unified Representation: Once the data is fused, the AI creates a unified representation of that information. This is a single, abstract data structure, typically a vector or set of vectors in a shared embedding space, that encapsulates the meaning from all the different modalities. Think of it as a single, multi-faceted "thought" that represents the user's input, regardless of whether it came from text, audio, or an image. This unified representation allows the AI to reason and generate a coherent response that takes all the input into account.

3. Action and Response Generation: Finally, the agent uses this unified representation to perform an action or generate a response. This response itself can be multi-modal. For example, it could generate a text-based answer, create an image, or even synthesize a voice response. The agent's ability to choose the most appropriate output modality for a given task is a key part of its intelligence.
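The three components above can be sketched in a few lines of Python. This is a toy illustration, not how any particular system is built: the embedding values are hand-written stand-ins for what trained encoders would produce, averaging is only one simple way to form a unified representation, and the names `best_matching_image`, `unified_representation`, and `choose_output_modality` are hypothetical.

```python
import math

# Hand-written toy vectors standing in for the outputs of trained encoders.
# Assumption: text and images have already been mapped into one shared space.
TEXT_EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.1, 0.9, 0.0],
}
IMAGE_EMBEDDINGS = {
    "photo_of_cat.png": [0.8, 0.2, 0.1],
    "photo_of_dog.png": [0.2, 0.8, 0.1],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_matching_image(word):
    """Data fusion: relate a word to the image nearest to it in the shared space."""
    query = TEXT_EMBEDDINGS[word]
    return max(IMAGE_EMBEDDINGS, key=lambda name: cosine(query, IMAGE_EMBEDDINGS[name]))


def unified_representation(vectors):
    """Unified representation: here, simply the element-wise mean of the inputs."""
    count = len(vectors)
    return [sum(v[i] for v in vectors) / count for i in range(len(vectors[0]))]


def choose_output_modality(hands_free, needs_demonstration):
    """Response generation: a hypothetical rule for picking the output modality."""
    if hands_free:
        return "audio"
    if needs_demonstration:
        return "video"
    return "text"


# The word "cat" lands closest to the cat photo in the shared space.
print(best_matching_image("cat"))

# Fuse a text input and an image input into one vector.
print(unified_representation([TEXT_EMBEDDINGS["cat"], IMAGE_EMBEDDINGS["photo_of_cat.png"]]))

# Pick how to answer a user who needs to see the repair being done.
print(choose_output_modality(hands_free=False, needs_demonstration=True))
```

In a real agent, each of these hand-written pieces would be replaced by a learned model, but the flow stays the same: align the modalities, combine them into one representation, then decide how to respond.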

The Multi-Modal Revolution in Action

The potential applications of multi-modal AI agents are vast and span across numerous industries.

- Customer Service: As seen in our appliance example, these agents can revolutionize customer support. They can analyze images of products, understand voice commands, and provide rich, multi-modal solutions, leading to faster and more accurate problem-solving. This could significantly reduce the need for human intervention in many support scenarios.

- Healthcare: Imagine a doctor uploading an X-ray image and a patient's symptoms via a voice memo. A multi-modal agent could analyze the image for anomalies, cross-reference them with the patient's symptoms, and suggest potential diagnoses to the doctor. This is not about replacing doctors but augmenting their capabilities with powerful tools for analysis and information synthesis.

- E-commerce: Multi-modal agents can create highly personalized shopping experiences. A user could upload a photo of a shirt they like and say, "Find me a similar shirt in blue, but with a different collar." The agent could then search its database using both the visual and verbal cues to provide a curated list of relevant products.

- Education: These agents can transform learning. A student could ask a question about a diagram in their textbook by pointing to it on their screen and asking, "What is this part and what does it do?" The agent could then provide a detailed explanation with an animated video, a text summary, and even a quiz to test their understanding.
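To make the e-commerce scenario more concrete, here is a minimal sketch of a search that uses both the visual and the verbal cue. It assumes the photo, the spoken request, and every catalog item have already been turned into vectors in one shared space; the vectors, the catalog entries, the `image_weight` parameter, and the function `rank_products` are all hypothetical and chosen only for illustration.

```python
import math

# Toy vectors with three hand-picked dimensions: [shirt shape, blue, red].
# Assumption: real encoders would produce these for photos, speech, and products.
CATALOG = {
    "blue_shirt": [1.0, 0.9, 0.0],
    "red_shirt": [1.0, 0.0, 0.9],
    "blue_scarf": [0.0, 1.0, 0.0],
}
PHOTO_QUERY = [1.0, 0.0, 0.8]  # the uploaded photo: a red shirt
VOICE_QUERY = [0.2, 1.0, 0.0]  # the spoken request: "in blue"


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_products(photo, voice, image_weight=0.5):
    """Rank catalog items by a weighted mix of visual and verbal similarity."""
    def score(item_vector):
        visual = cosine(photo, item_vector)
        verbal = cosine(voice, item_vector)
        return image_weight * visual + (1.0 - image_weight) * verbal

    return sorted(CATALOG, key=lambda name: score(CATALOG[name]), reverse=True)


# Using both cues puts the blue shirt first.
print(rank_products(PHOTO_QUERY, VOICE_QUERY))

# Using only the photo favors the red shirt; using only the voice favors the scarf.
print(rank_products(PHOTO_QUERY, VOICE_QUERY, image_weight=1.0))
print(rank_products(PHOTO_QUERY, VOICE_QUERY, image_weight=0.0))
```

Neither cue alone finds the right product in this toy catalog: the photo alone returns the shirt in the wrong color, and the voice note alone returns a blue item that is not a shirt. Combining the two scores is what surfaces the blue shirt.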

The Future is Multi-Modal

As we move forward, the development of these agents will become increasingly crucial. The demand for more natural and intuitive human-computer interaction is driving innovation in this space. Creating truly effective multi-modal agents requires a deep understanding of not just individual AI models but also how to effectively integrate them.

AI agent development services are becoming a core offering for many forward-thinking AI development companies. These companies help organizations build such complex systems from the ground up, drawing on expertise in natural language processing, computer vision, and machine learning. As the technology matures, we can expect even more sophisticated multi-modal AI agents that understand and respond to the world in a way that feels intuitive and human-like. The future of AI is not just about understanding words or pictures in isolation; it's about understanding the world in its entirety, and that future is multi-modal.
