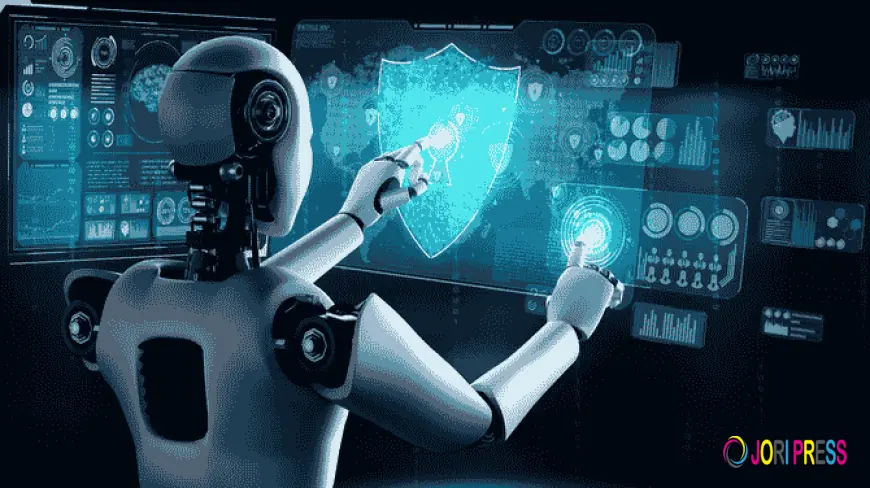

Cybersecurity Regulations of AI and Risk Management

The Future of Cybersecurity Regulations of AI in 2026 explores how global laws, ethical standards, and business strategies are evolving to address AI-driven cyber threats, accountability, and compliance in a rapidly changing digital landscape.

The rapid integration of artificial intelligence into digital infrastructure is transforming how cybersecurity is designed, enforced, and regulated worldwide. As organizations increasingly rely on AI-driven systems to manage sensitive data, automate decisions, and predict threats, regulators must oversee technology that evolves faster than the legal standards written for it. The Future of Cybersecurity Regulations of AI in 2026 reflects a global effort to balance innovation with protection, ensuring that intelligent systems strengthen security without introducing uncontrollable risks.

Artificial intelligence has become a core component of modern cybersecurity strategies. Machine learning algorithms now detect anomalies faster than human analysts, automate threat responses, and continuously adapt to emerging attack patterns. While these capabilities improve resilience, they also expand the attack surface. Malicious actors are exploiting AI to create advanced phishing campaigns, autonomous malware, and deepfake-driven social engineering attacks. This escalating arms race is forcing regulators to reconsider how cybersecurity laws apply to systems capable of independent decision-making.

The Future of Cybersecurity Regulations of AI in 2026 highlights a decisive move away from static compliance models toward adaptive regulatory frameworks. Traditional cybersecurity regulations were designed for predictable, rule-based systems managed directly by humans. AI systems, however, evolve continuously, making it difficult to assess risk using outdated legal standards. Regulators are now focusing on lifecycle-based oversight, requiring organizations to evaluate AI security risks from development through deployment and ongoing operation. This approach ensures that vulnerabilities introduced by algorithmic updates or data changes are addressed proactively.

Global policy alignment is becoming a defining factor in AI cybersecurity governance. Governments recognize that cyber threats do not respect national borders, especially when powered by AI. By 2026, international cooperation is expected to intensify, with shared standards for AI transparency, data protection, and automated decision accountability. Regional initiatives are influencing one another, creating a more unified regulatory landscape. Analysis from Business Insight Journal emphasizes that harmonized policies reduce compliance complexity while limiting regulatory gaps that attackers exploit.

Accountability is emerging as one of the most critical regulatory priorities. When an AI-driven security system makes a faulty decision or fails to prevent a breach, determining responsibility becomes complex. Future regulations are expected to clearly define liability across developers, vendors, and deploying organizations. Mandatory documentation of AI decision logic and security testing protocols will help regulators audit incidents effectively. This shift promotes responsible AI innovation while discouraging the deployment of opaque systems that cannot be explained or controlled.

For businesses, the regulatory evolution surrounding AI cybersecurity represents both a challenge and an opportunity. Compliance will require investment in governance structures, risk management frameworks, and skilled personnel capable of managing AI security responsibly. Organizations that adapt early will gain competitive advantages by building trust with customers and partners. Coverage in Business Insight Journal frequently highlights that regulation-ready enterprises are better positioned to scale AI initiatives without disruption, avoiding costly penalties and reputational damage.

Ethical considerations are becoming inseparable from cybersecurity regulation. As AI systems monitor behavior, analyze communications, and automate surveillance, concerns around privacy and misuse are intensifying. Regulators are expected to mandate ethical impact assessments to ensure AI security tools respect fundamental rights. These measures aim to prevent discriminatory outcomes, excessive data collection, and unauthorized monitoring. The Future of Cybersecurity Regulations of AI in 2026 places strong emphasis on aligning security objectives with ethical responsibility, reinforcing public confidence in intelligent systems.

Industry intelligence platforms are playing a crucial role in helping organizations navigate this complex regulatory environment. Trusted sources such as Business Insight Journal provide in-depth analysis of policy developments, emerging threats, and best practices for compliance. Staying informed allows cybersecurity leaders to anticipate regulatory changes rather than react to them. Many professionals turn to curated knowledge communities like Business Insight Journal - Inner Circle to gain strategic insights that support long-term AI governance planning.

Looking ahead to 2026, preparation will be the defining factor in successful AI cybersecurity adoption. Organizations must prioritize flexible security architectures capable of adapting to regulatory updates and evolving threats. Continuous training, cross-functional collaboration, and transparent AI governance models will become standard requirements. The Future of Cybersecurity Regulations of AI in 2026 signals a pivotal moment where innovation and regulation converge, shaping a digital environment that is both intelligent and secure.
